Monday, 17 June 2013

NFDP13: Plenary Panel Two: Where do we want to go?

This is my final post about the Now and Future of Data Publishing symposium and is a write-up of my speaking notes from the last plenary panel of the day.

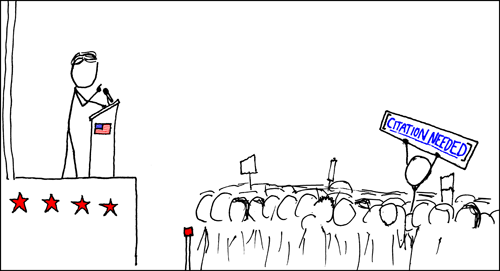

As before, I didn't have any slides, but used the above xkcd picture as a backdrop, because I thought it summed things up nicely!

My topic was: "Data and society - how can we ensure future political discussions are evidence led?"

"I work for the British Atmospheric Data Centre, and we happen to be one of the data nodes hosting the data from the 5th Climate Model Intercomparison Project (CMIP5). What this means is that we're hosting and managing about 2 Petabytes worth of climate model output, which will feed into the next Intergovernmental Panel on Climate Change's Assessment Report and will be used by national and local governments to set policy given future projections of climate change.

But if we attempted to show politicians the raw data from these model runs, they'd probably need to go and have a quiet lie down in a darkened room somewhere. The raw data is just too much and too complicated for anyone other than the experts. That's why we need to provide tools and services. But we also need to keep the raw data so the outputs of those tools and services can be verified.

Communication is difficult. It's hard enough to cross scientific domains, let alone the scientist/non-scientist divide. As repositories, we collect metadata about the datasets in our archives, but this metadata is often far too specific and specialised for a member of the general public or a science journalist to understand. Data papers allow users to read the story of the dataset and find out details of how and why it was made, while putting it into context. And data papers are a lot easier for humans to read than an XML catalogue page.

Data publication can help us with transparency and trust. Politicians can't be scientific experts - they need to be political experts. So they need to rely on advisors who are scientists or science journalists for that advice - and preferably more than one advisor.

Making researchers' data open means that it can be checked by others. Publishing data (in the formal data journal sense) means that it'll be peer-reviewed, which (in theory at least) will cut down on fraud. It's harder to fake a dataset than a graph. (I know this personally, because I spent my PhD trying to simulate radar measurements of rain fields, with limited success!)

With data publishing, researchers can publish negative results. The dataset is what it is and can be published even if it doesn't support a hypothesis - helpful when it comes to avoiding going down the wrong research track.

As for what we, personally, can do? I'd say: lead by example. If you're a researcher, be open with your data if you can. (Not all data should be open, for very good reasons, for example if it's health data and personal details are involved.) If you're an editor, reviewer, or funder, simply ask the question: "where's the data?"

And everyone: pick on your MP. Query the statistics reported by them (and the press), ask for evidence. Remember, the Freedom of Information Act is your friend.

And never forget, 87.3% of statistics are made up on the spot!"

_________________________________________________________

Addendum:

After a question from the audience, I did need to make clear that when you're pestering people about where their data is, be nice about it! Don't buttonhole people at parties or back them into corners. Instead of yelling "where's your data?!?" ask questions like: "Your work sounds really interesting. I'd love to know more, do you make your data available anywhere?" "Did you hear about this new data publication thing? Yeah, it means you can publish your data in a trusted repository and get a paper out of it to show the promotion committee." Things like that.

If you're talking the talk, don't forget to walk the walk.

Thursday, 13 June 2013

NFDP13: Panel 3.1 Citation, Impacts, Metrics

This was my title and abstract for the panel session on Citation, Impact, Metrics at the Now and Future of Data Publishing event in Oxford on 22nd May 2013.

Data Citation Principles

I’ll talk about data citation principles and the work done by the CODATA task group on Data Citation. I’ll also touch on the implications of data publication for data repositories and for the researchers who create the data.

And here is a write-up of my presentation notes:

(No, I didn't have any slides - I just used the above PhD comic as a background)

"Hands up if you think data is important. (Pretty much all the audience's hands went up) That's good!

Hands up if you've ever written a journal paper... (Some hands went up) ... and feel you've got credit. (some hands went down again)

Hands up if you've ever created a dataset... (fewer hands up) ... and got credit. (No hands up!)

So, if data's so important, why aren't the creators getting the credit for it?

We're proposing data citation and publication as a method to give researchers credit for their efforts in creating data. The problem is that citation is designed to link one paper to another - that's it. And those papers are printed on and frozen in dead tree format. We've loaded citation with other purposes, for example attribution, discovery, credit. But citation isn't really a good fit for data, because data is so changeable and/or takes such a long time and so many people to create it.

But to make data publication and citation work, data needs to be frozen to become the version of record that will allow the science to become reproducible. Yes, this might be considered a special case of dealing with data, but it's an important one. The version of record can always link to the most up-to-date version of the dataset after all.

Research is getting to be all about impact - how a researcher's work affects the rest of the world. To quantify impact we need metrics. Citation counts for papers are well-known and well-established metrics, which is why we're piggybacking on them for data. Institutions, funders and repositories all need metrics to support their impact claims too. For example, a repository manager can use citation to track how researchers are using the data downloaded from the repository.

The CODATA task group on data citation is an international group. We've written a report: "Citation of data: the current state of practice, policy and technology". It's currently with the external reviewers and we're hoping to release it this summer. It's a big document, about 190 pages. In it there are ten data citation principles:

- Status of Data: Data citations should be accorded the same importance in the scholarly record as the citation of other objects.

- Attribution: A citation to data should facilitate giving scholarly credit and legal attribution to all parties responsible for those data.

- Persistence: Citations should refer to objects that persist.

- Access: Citations should facilitate access to data by humans and by machines.

- Discovery: Citations should support the discovery of data.

- Provenance: Citations should facilitate the establishment of provenance of data.

- Granularity: Citations should support the finest-grained description necessary to identify the data.

- Verifiability: Citations should contain information sufficient to identify the data unambiguously.

- Metadata Standards: A citation should employ existing metadata standards.

- Flexibility: Citation methods should be sufficiently flexible to accommodate the variant practices among communities.

None of these are particularly controversial, though as we try citing more and more datasets, the devil will be in the detail.

Citation does have the benefit that researchers already are used to doing it as part of their standard practice. The technology also exists, so what we need to do is encourage the culture change so data citation is the norm. I think we're getting there."

The Now and Future of Data Publishing - Oxford, 22 May 2013

Book printing in the 15th century - Wikimedia Commons

St Anne's College, Oxford, was host to a large group of researchers, librarians, data managers and academic publishers for the Now and Future of Data Publishing symposium, funded by the Jisc Managing Research Data programme in partnership with BioSharing, DataONE, Dryad, the International Association of Scientific, Technical and Medical Publishers, and Wiley-Blackwell.

There was a lot of tweeting done over the course of the day (#nfdp13) so I won't repeat it here. (I've made a Storify of all my tweets and retweets - unfortunately Storify couldn't seem to find the #nfdp13 tweets for other people, so I couldn't add them in.) I was also on two of the panels, so may have missed a few bits of information there - it's hard to tweet when you're sitting on a stage in front of an audience!

A few things struck me about the event:

- It was really good to see so many enthusiastic people there!

- The meme on the difference between *p*ublication (e.g. in a blog post) and *P*ublication (e.g. in a peer-reviewed journal) is spreading.

- I've got a dodgy feeling about the use of "data descriptors" instead of "data papers" in Nature's Scientific Data - it feels like publishing the data in that journal doesn't give it the full recognition it deserves. Also, as a scientist, I want to publish papers, not data descriptors. I can't report a data descriptor to REF, but I can report a data paper.

- It wasn't just me showing cartoons and pictures of cats.

- I could really do with finding the time to sit down and think properly about Parsons' and Fox's excellent article Is Data Publication the Right Metaphor? (and maybe even the time to write a proper response).

- Only archiving the data that's directly connected with a journal article risks authors only keeping the cherry-picked data they used to justify their conclusions. Also it doesn't cover the vast range of scientific data that is important and irreproducible, but isn't the direct subject of a paper. Nor does it offer any solution for the problem of negative data. Still, archiving the data used in the paper is a good thing to do - it just shouldn't be the only archiving done.

- Our current methods of scientific publication have worked for 300 years - that's pretty good going, even if we do need to update them now!
